Research Overview

The Osborne Laboratory focuses on smooth pursuit, an eye movement behavior that allows our eyes to track moving targets. Precision is at a premium in pursuit, since any failure to match a target's motion exactly causes its image to blur as it slides across the retina. We find that pursuit can be a reliable read-out of the target motion the brain perceives, giving us a means to infer the visual activity that gave rise to the behavioral response. Through simultaneous multi-electrode recording of neural activity in extrastriate cortex and high-resolution recording of eye movements, we are working to uncover the mechanisms by which visual information is represented in the activity of cortical populations and translated into motor commands, and to understand how the whole system learns and adapts to maintain precise behavior.

Visual Motion Processing

To perceive and respond appropriately to sensory stimuli, our brains must interpret the activity of large populations of cortical neurons. In visual motion processing, target direction and speed are estimated by pooling across the population response in cortical extrastriate area MT. However, the intrinsic variability of neural responses poses a problem. To improve the reliability of sensory estimates, the brain must integrate stimulus information over time, pool the responses of many neurons, or both.
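The pooling argument can be illustrated with a toy population-vector decoder, one classic way of reading out motion direction from a population of direction-tuned neurons. This is a minimal sketch under stated assumptions, not the lab's decoding model: the rectified-cosine tuning, the Poisson spike counts, the firing rates, the evenly spaced preferred directions, and the names `poisson`, `simulate_counts`, `population_vector`, and `rms_direction_error` are all hypothetical.

```python
import math
import random


def poisson(lam, rng):
    """Sample a Poisson count with Knuth's multiplication method (fine for small lam)."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def simulate_counts(direction_deg, preferred_deg, window_s, rng,
                    baseline_hz=10.0, gain_hz=40.0):
    """Spike counts from hypothetical rectified-cosine-tuned, MT-like neurons."""
    counts = []
    for pref in preferred_deg:
        tuning = max(0.0, math.cos(math.radians(direction_deg - pref)))
        rate_hz = baseline_hz + gain_hz * tuning
        counts.append(poisson(rate_hz * window_s, rng))
    return counts


def population_vector(counts, preferred_deg):
    """Sum each neuron's preferred-direction unit vector, weighted by its spike count."""
    x = sum(c * math.cos(math.radians(p)) for c, p in zip(counts, preferred_deg))
    y = sum(c * math.sin(math.radians(p)) for c, p in zip(counts, preferred_deg))
    return math.degrees(math.atan2(y, x)) % 360.0


def rms_direction_error(n_neurons, window_s, n_trials=400, true_deg=30.0, seed=1):
    """RMS angular error (deg) of the decoded direction across simulated trials."""
    rng = random.Random(seed)
    preferred = [360.0 * i / n_neurons for i in range(n_neurons)]
    sq_err = 0.0
    for _ in range(n_trials):
        counts = simulate_counts(true_deg, preferred, window_s, rng)
        est = population_vector(counts, preferred)
        err = (est - true_deg + 180.0) % 360.0 - 180.0  # wrap to [-180, 180)
        sq_err += err * err
    return math.sqrt(sq_err / n_trials)


for n, w in [(8, 0.05), (8, 0.5), (64, 0.05), (64, 0.5)]:
    print(f"{n:3d} neurons, {w * 1000:4.0f} ms window: "
          f"RMS error {rms_direction_error(n, w):5.1f} deg")
```

In this toy model the RMS error falls as either the number of pooled neurons or the counting window grows, because both increase the total number of spikes that contribute to the estimate.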

Sensory Motor Integration

We have approached the problem of sensory estimation in a noisy neural environment by studying the behavioral output of a precise sensory-motor system: smooth pursuit eye movements. In pursuit, the eyes move smoothly to intercept and track a moving target, minimizing the slip of the target's image across the retina. To track a moving target, pursuit must detect the onset of motion and estimate the target's direction and speed. Our prior work has suggested that trial-by-trial variation in pursuit arises primarily from errors in estimating the sensory parameters of target motion, while the motor side of the system follows those erroneous estimates faithfully (Osborne et al. 2005, 2007).
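One signature of that idea can be shown in a toy simulation. If trial-by-trial variation comes from errors in the sensory estimate, which the motor side then follows faithfully, a trial whose estimated speed is high stays fast throughout, so deviations at different times within a trial are correlated. Independent noise added downstream at each moment would not produce that structure. This is a sketch under our own assumptions (an exponential rise in eye speed, Gaussian errors in estimated speed and latency, and the hypothetical names `eye_speed`, `simulate_trials`, and `pearson`), not the analysis used in the cited papers.

```python
import math
import random


def eye_speed(t_ms, target_speed, latency_ms, tau_ms=60.0):
    """Toy pursuit velocity: exponential rise toward the estimated target speed after a latency."""
    if t_ms < latency_ms:
        return 0.0
    return target_speed * (1.0 - math.exp(-(t_ms - latency_ms) / tau_ms))


def simulate_trials(model, n_trials=400, times_ms=(150.0, 250.0), seed=2):
    """Eye speed (deg/s) at two times on each trial, under one of two hypothetical noise models."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        if model == "sensory":
            # Errors in the estimated target speed and motion onset; the motor side follows them exactly.
            speed = rng.gauss(20.0, 2.0)
            latency = rng.gauss(100.0, 8.0)
            trials.append([eye_speed(t, speed, latency) for t in times_ms])
        elif model == "motor":
            # Perfect sensory estimate; independent noise added downstream at each time point.
            trials.append([eye_speed(t, 20.0, 100.0) + rng.gauss(0.0, 2.0) for t in times_ms])
        else:
            raise ValueError(f"unknown model: {model!r}")
    return trials


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


for model in ("sensory", "motor"):
    trials = simulate_trials(model)
    r = pearson([tr[0] for tr in trials], [tr[1] for tr in trials])
    print(f"{model:>7} noise: correlation of eye speed at 150 ms and 250 ms = {r:.2f}")
```

The contrast is stark here only because the downstream noise in the toy "motor" model is independent at each time point; real motor noise need not be, so this illustrates the logic rather than testing it.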

Current Research Projects

1. Video game project

|

|

Video game with subject's eye position superimposed as a red cross |

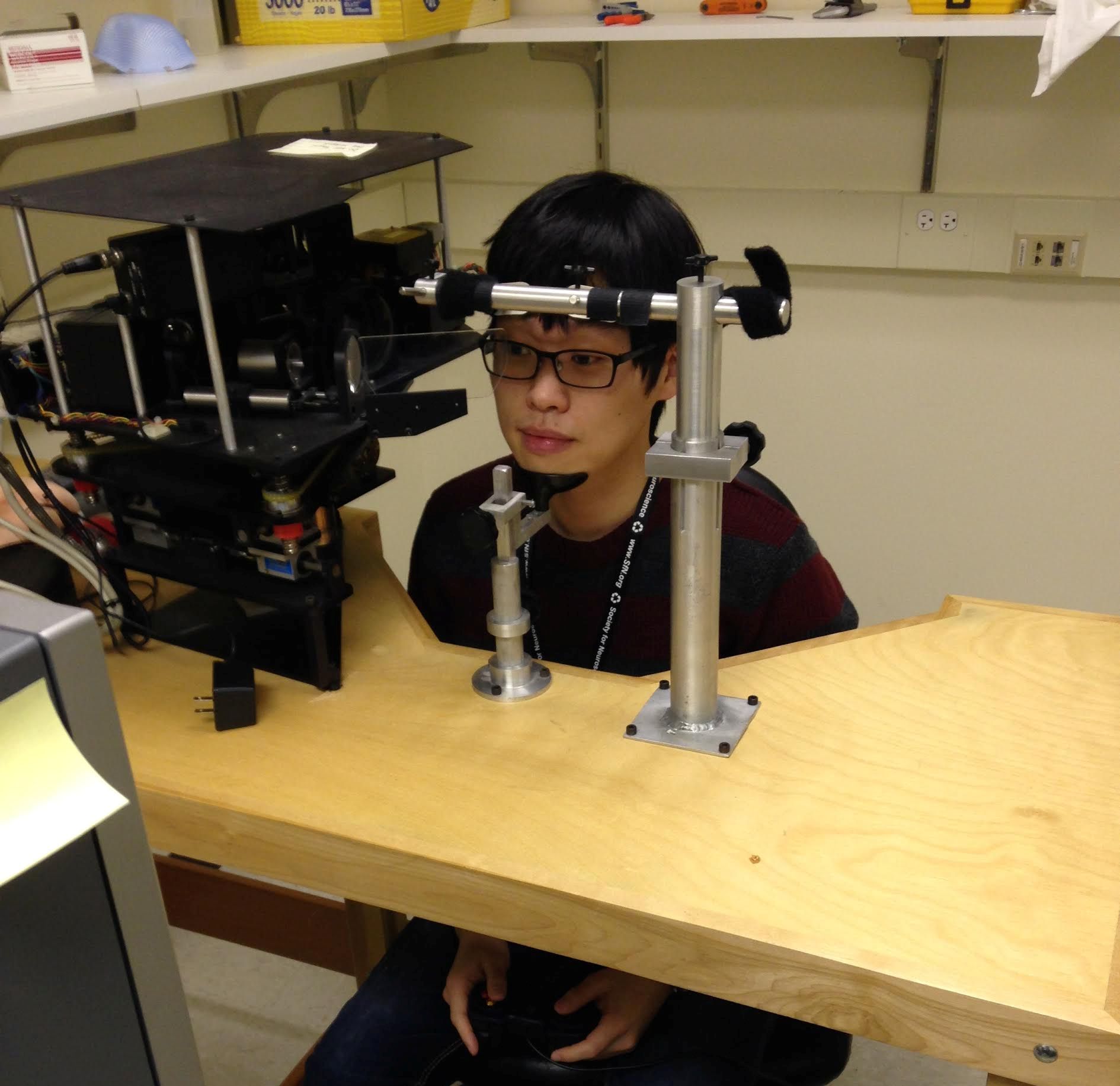

Alex in the eye tracker playing the game |

Project Overview

Little is known about how the brain processes visual motion information under natural conditions, or about how moving scenes engage eye movements. When the head is still, gaze patterns are sequences of smooth eye movements that track visual motion, saccadic jumps that aim the retinal fovea at different elements of the scene, and fixations that hold gaze steady on an object of interest. While we can study each of these behaviors in the laboratory using tasks designed to isolate one form of eye movement, we ultimately want to know how they are coordinated during natural viewing. Specifically, we would like to be able to predict the upcoming eye movement given the current retinal inputs and the current state of the eye. So far, efforts to model gaze behavior during the viewing of Hollywood movies have been largely unsuccessful, likely because the viewer's cognitive state was poorly controlled, which created large variation in gaze patterns. Our approach is to simplify the visual scene to the point where we can more easily identify which objects are engaging visual attention. To that end, we have developed a Python-based video game environment inspired by Atari's Pong, one of the original video games.
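The three behaviors described above can be separated in a recorded gaze trace by eye speed. The sketch below is a simple velocity-threshold classifier, a common textbook approach and not necessarily the one the lab uses; the 1000 Hz sample rate, the 30 deg/s saccade threshold, the 1 deg/s fixation threshold, the synthetic trace, and the names `classify_gaze` and `segments` are hypothetical choices for illustration. Practical pipelines usually also filter the signal and apply minimum-duration rules.

```python
import math


def classify_gaze(x_deg, y_deg, sample_rate_hz, saccade_deg_s=30.0, fixation_deg_s=1.0):
    """Label each inter-sample interval as 'saccade', 'pursuit', or 'fixation' by eye speed."""
    dt = 1.0 / sample_rate_hz
    labels = []
    for i in range(1, len(x_deg)):
        speed = math.hypot(x_deg[i] - x_deg[i - 1], y_deg[i] - y_deg[i - 1]) / dt
        if speed >= saccade_deg_s:
            labels.append("saccade")
        elif speed >= fixation_deg_s:
            labels.append("pursuit")
        else:
            labels.append("fixation")
    return labels


def segments(labels):
    """Collapse runs of identical labels into (label, run_length) pairs."""
    runs = []
    for lab in labels:
        if runs and runs[-1][0] == lab:
            runs[-1][1] += 1
        else:
            runs.append([lab, 1])
    return [(lab, n) for lab, n in runs]


# Synthetic horizontal trace sampled at a hypothetical 1000 Hz:
# 50 ms of fixation, a 5 deg saccade lasting 20 ms, then 100 ms of 10 deg/s pursuit.
x_trace = [0.0] * 50
for _ in range(20):
    x_trace.append(x_trace[-1] + 0.25)
for _ in range(100):
    x_trace.append(x_trace[-1] + 0.01)
y_trace = [0.0] * len(x_trace)

print(segments(classify_gaze(x_trace, y_trace, 1000.0)))
# [('fixation', 49), ('saccade', 20), ('pursuit', 100)]
```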

Pong is a "tennis" game which, in our version, involves a three-sided arena, a ball that bounces off the arena walls, and a paddle that the player moves with a controller. Subjects play the game while sitting in an eye tracker that monitors the direction of gaze of the right eye. The tracker we use is a Dual-Purkinje Image infrared tracker (Ward Electronics) with arc-minute resolution and a very fast response time, which allows us to follow both smooth pursuit eye movements and saccadic jumps in gaze position. We find that the game engages the viewer's attention and produces similar patterns of gaze behavior across subjects. The game simplifies the task of relating gaze behavior to the moving scene, allowing us to develop a model of target-eye interaction.
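To make the arena concrete, here is a minimal sketch of one frame of ball physics for a three-sided Pong arena: mirror-reflecting walls at the bottom, top, and back, and an open side guarded by the paddle. It is not the lab's game code; the `Ball` class, the `step` function, the arena dimensions, the paddle size, and the 60 frames/s rate are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Ball:
    x: float   # horizontal position (arbitrary units; 0 is the back wall)
    y: float   # vertical position (0 is the bottom wall)
    vx: float  # horizontal velocity (units/s)
    vy: float  # vertical velocity (units/s)


def step(ball, dt, width, height, paddle_y, paddle_half):
    """Advance the ball by one frame of length dt.

    Returns "hit" if the paddle returned the ball, "miss" if the ball left
    through the open side, otherwise None. Assumes dt is small enough that
    the ball crosses at most one boundary per axis per frame.
    """
    ball.x += ball.vx * dt
    ball.y += ball.vy * dt

    # Bottom and top walls: mirror the overshoot back inside and flip vy.
    if ball.y < 0.0:
        ball.y, ball.vy = -ball.y, -ball.vy
    elif ball.y > height:
        ball.y, ball.vy = 2.0 * height - ball.y, -ball.vy

    # Back wall (the third side of the arena).
    if ball.x < 0.0:
        ball.x, ball.vx = -ball.x, -ball.vx

    # Open side at x = width, guarded by the player's paddle.
    if ball.x > width:
        if abs(ball.y - paddle_y) <= paddle_half:
            ball.x, ball.vx = 2.0 * width - ball.x, -ball.vx
            return "hit"
        return "miss"
    return None


# Usage: a stationary paddle, run until the ball gets past it (or 10 s elapse).
ball = Ball(x=100.0, y=150.0, vx=120.0, vy=90.0)
events = []
for frame in range(600):
    event = step(ball, 1 / 60, width=400.0, height=300.0, paddle_y=150.0, paddle_half=40.0)
    if event:
        events.append((frame, event))
        if event == "miss":
            break
print(events)
```

Reflecting the overshoot, rather than clamping the ball to the wall, keeps the distance traveled per frame exact, so the ball's path stays identical to a continuous-time bounce.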

What we find is that it isn't only what the ball just did, but where we extrapolate the ball will go in the near future, that determines where we look. That makes sense: it takes the brain 100-300 ms to process incoming visual information and generate commands to move the eyes. If we waited for that information before moving, our eyes would always lag behind the ball. To counteract sensory processing delays, the brain has to form predictions, learning how the target is likely to move in order to extrapolate where it will be in the near future. We can see evidence of this prediction in the movements of athletes: tennis and baseball players begin their swings while the ball is in flight, anticipating where and when they will make contact. In our lab, we can measure information about future target motion in the Pong player's eye movements. Because we control the physics of the game environment, we can manipulate how far into the future the ball's movement can be predicted: by adding virtual turbulence that can deflect the ball's direction of motion, by changing the ball speed, or by varying how the ball bounces off the arena walls. Experiments are underway to explore predictive gaze behavior and whether it adapts to how predictable the target motion is.
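The argument about sensory delays can be made quantitative with a toy one-dimensional model of the ball's vertical motion between two walls. A "reactive" estimate uses where the ball was when the visual information left the retina; a "predictive" estimate extrapolates that position forward by the delay, folding the path back at each wall bounce. The 200 ms delay sits inside the 100-300 ms range quoted above. The arbitrary units, the Gaussian per-frame velocity kicks standing in for "virtual turbulence", and the names `fold`, `predict_y`, `simulate_y`, and `mean_errors` are assumptions for illustration, not the lab's implementation.

```python
import random


def fold(y, height):
    """Map an unbounded ('unfolded') coordinate into [0, height], mirroring at each wall."""
    period = 2.0 * height
    y = y % period
    return y if y <= height else period - y


def predict_y(y_now, vy_now, horizon_s, height):
    """Extrapolate the position horizon_s ahead, assuming constant speed and mirror bounces."""
    return fold(y_now + vy_now * horizon_s, height)


def simulate_y(n_frames, dt, height, vy0, turbulence, seed=3):
    """Vertical trajectory with mirror bounces; turbulence is a hypothetical per-frame velocity kick."""
    rng = random.Random(seed)
    y, vy = height / 3.0, vy0
    ys, vys = [y], [vy]
    for _ in range(n_frames):
        vy += rng.gauss(0.0, turbulence)
        y += vy * dt
        if y < 0.0:
            y, vy = -y, -vy
        elif y > height:
            y, vy = 2.0 * height - y, -vy
        ys.append(y)
        vys.append(vy)
    return ys, vys


def mean_errors(turbulence, delay_s=0.2, dt=0.01, height=300.0, vy0=150.0, n_frames=2000):
    """Mean |error| of a reactive (lagged) vs a predictive (extrapolated) estimate of y(t + delay)."""
    ys, vys = simulate_y(n_frames, dt, height, vy0, turbulence)
    lag = round(delay_s / dt)
    n = len(ys) - lag
    lag_err = pred_err = 0.0
    for t in range(n):
        actual = ys[t + lag]
        lag_err += abs(ys[t] - actual)
        pred_err += abs(predict_y(ys[t], vys[t], delay_s, height) - actual)
    return lag_err / n, pred_err / n


for turb in (0.0, 5.0, 20.0):
    lag_e, pred_e = mean_errors(turb)
    print(f"turbulence {turb:4.1f}: reactive error {lag_e:6.2f}, predictive error {pred_e:6.2f}")
```

Without turbulence, the extrapolation is exact and the predictive error is essentially zero, while the reactive estimate is off by roughly the distance the ball travels during the delay. As turbulence grows, the extrapolation error grows with it, which is the sense in which the game's physics sets how far ahead the ball's motion can be predicted.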

This research may lead to better diagnoses and a better understanding of diseases that affect brain function, by detecting impairments in a subject's ability to incorporate prediction into gaze behavior. Neurodegenerative diseases, particularly those involving disorders of the basal ganglia, can impair how well recent experience is used to modify behavior. Learning rates in motor tasks are slower in patients with Parkinson’s disease, and these patients adapt less completely to changes in sensory feedback than do healthy subjects. Eye movements are particularly sensitive to diseases and conditions that affect brain function; in fact, changes in gaze patterns are being used to help diagnose and differentiate between neurodegenerative disorders in their early stages, before large-scale movements are impaired. With a better understanding of healthy gaze behavior, we hope to expand our research to patient populations, with the goal of developing clinical tools for the early detection of neurodegenerative disorders.

Citation

S.A. Lee, M. Battifarano and L.C. Osborne (2014). Target motion predictability determines the predictability of gaze decisions from retinal inputs.

Poster presented at the annual meeting of the Society for Neuroscience in Washington, DC, Nov. 2014

Poster presented at the Brain Research Foundation's Neuroscience Day in Chicago, IL, Jan. 2015


2. Shared sensory estimates for human motion perception and pursuit eye movements


Citation

T. Mukherjee, M. Battifarano and L.C. Osborne (2015). Shared sensory estimates for human motion perception and pursuit eye movements.

Poster presented at the Brain Research Foundation's Neuroscience Day in Chicago, IL, Jan. 2015


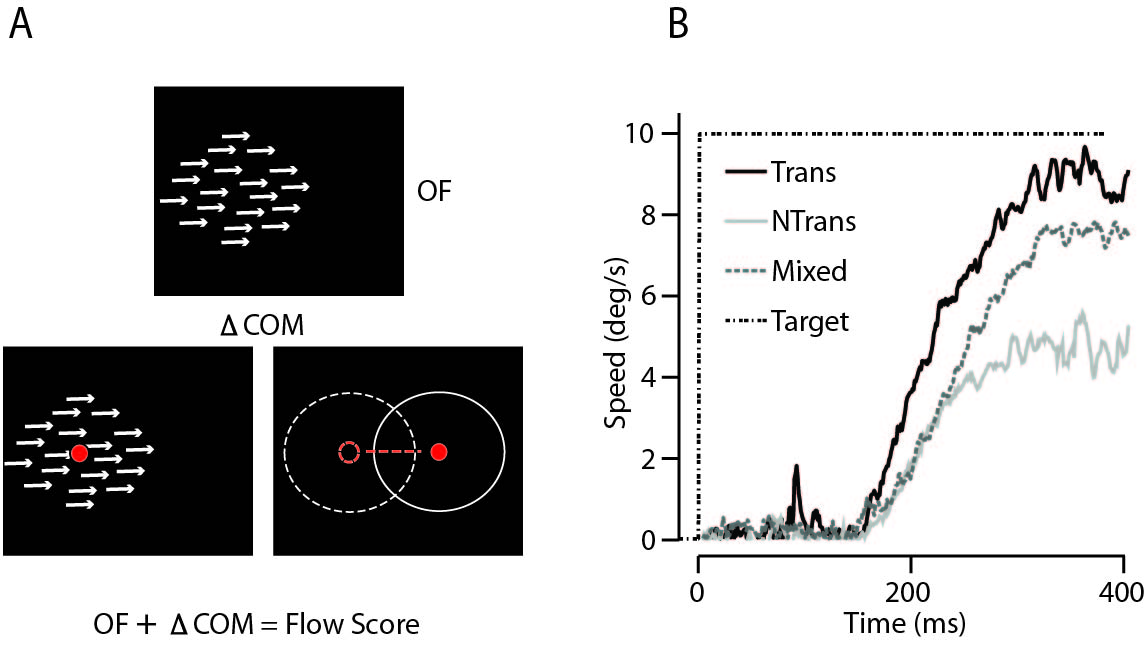

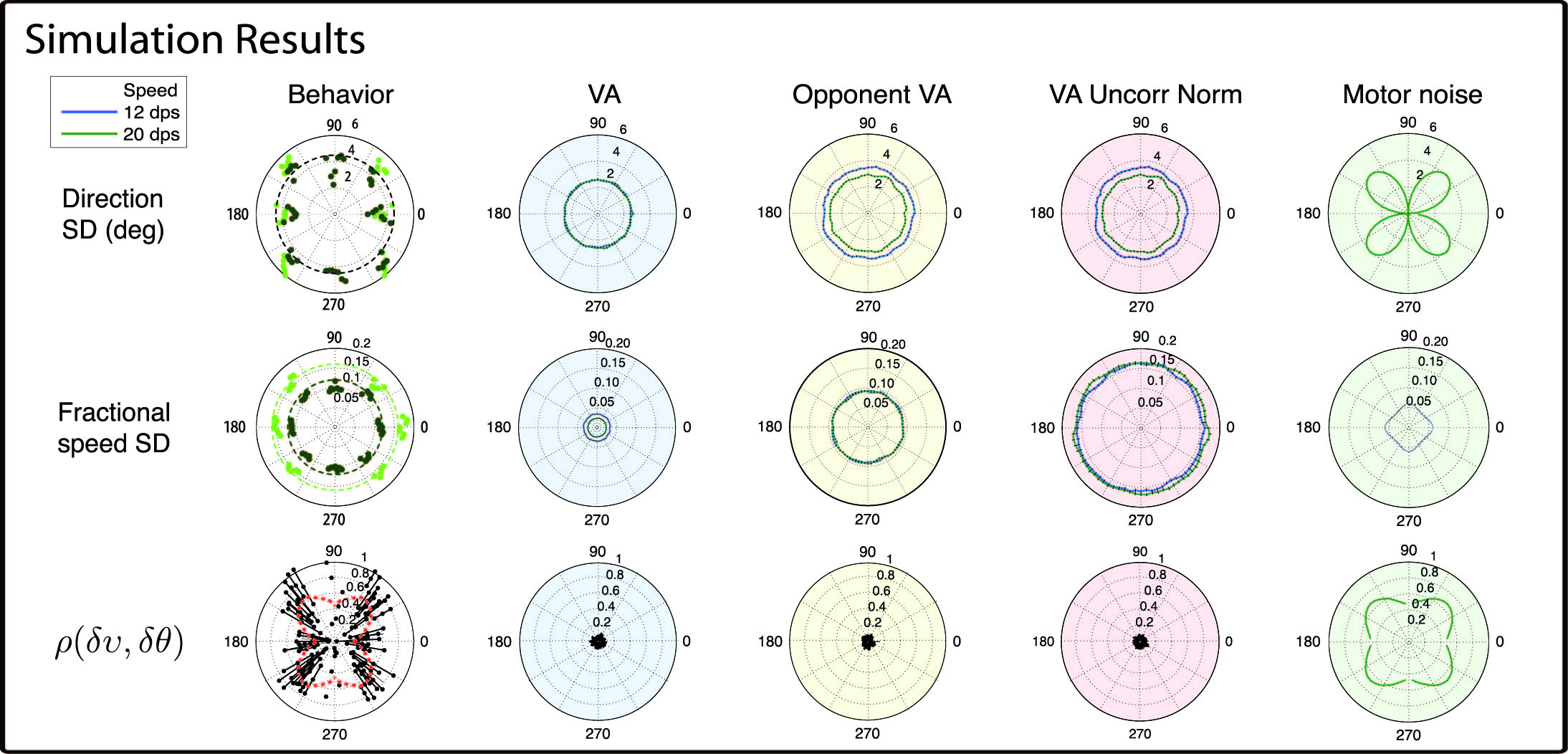

3. Constraints on decoding of visual motion parameters for smooth pursuit from cortical population activity


Citation

M. Macellaio and L.C. Osborne (2015). Constraints on decoding of visual motion parameters for smooth pursuit from cortical population activity.

Poster presented at the annual meeting of the Society for Neuroscience in Washington, DC, Nov. 2014

Poster presented at the Brain Research Foundation's Neuroscience Day in Chicago, IL, Jan. 2015


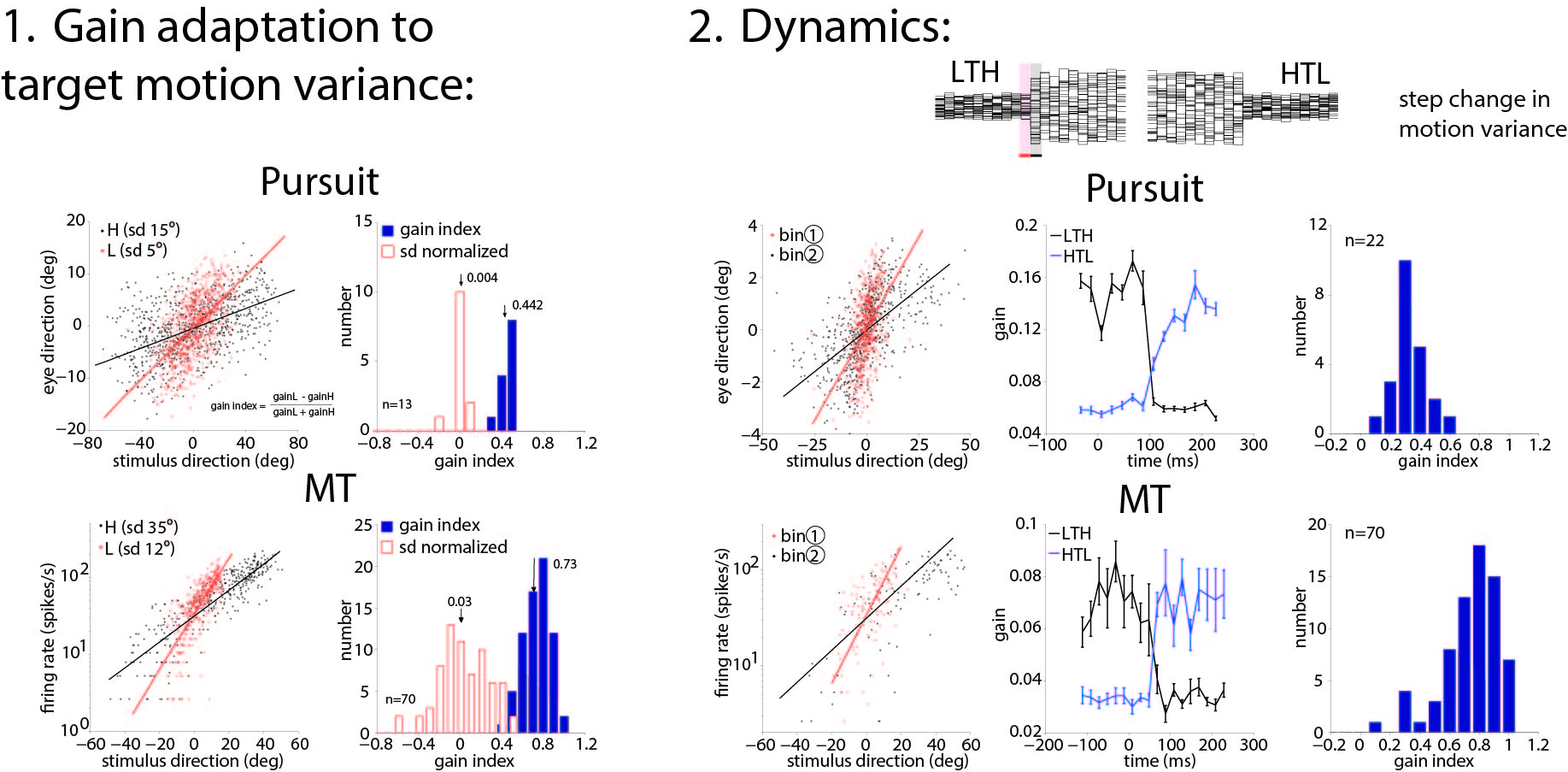

4. Efficient coding of visual motion signals in area MT and smooth pursuit


Citation

B. Liu and L.C. Osborne (2015). Efficient coding of visual motion signals in area MT and smooth pursuit.

Poster presented at the annual meeting of the Society for Neuroscience in Washington, DC, Nov. 2014

Poster presented at the Brain Research Foundation's Neuroscience Day in Chicago, IL, Jan. 2015


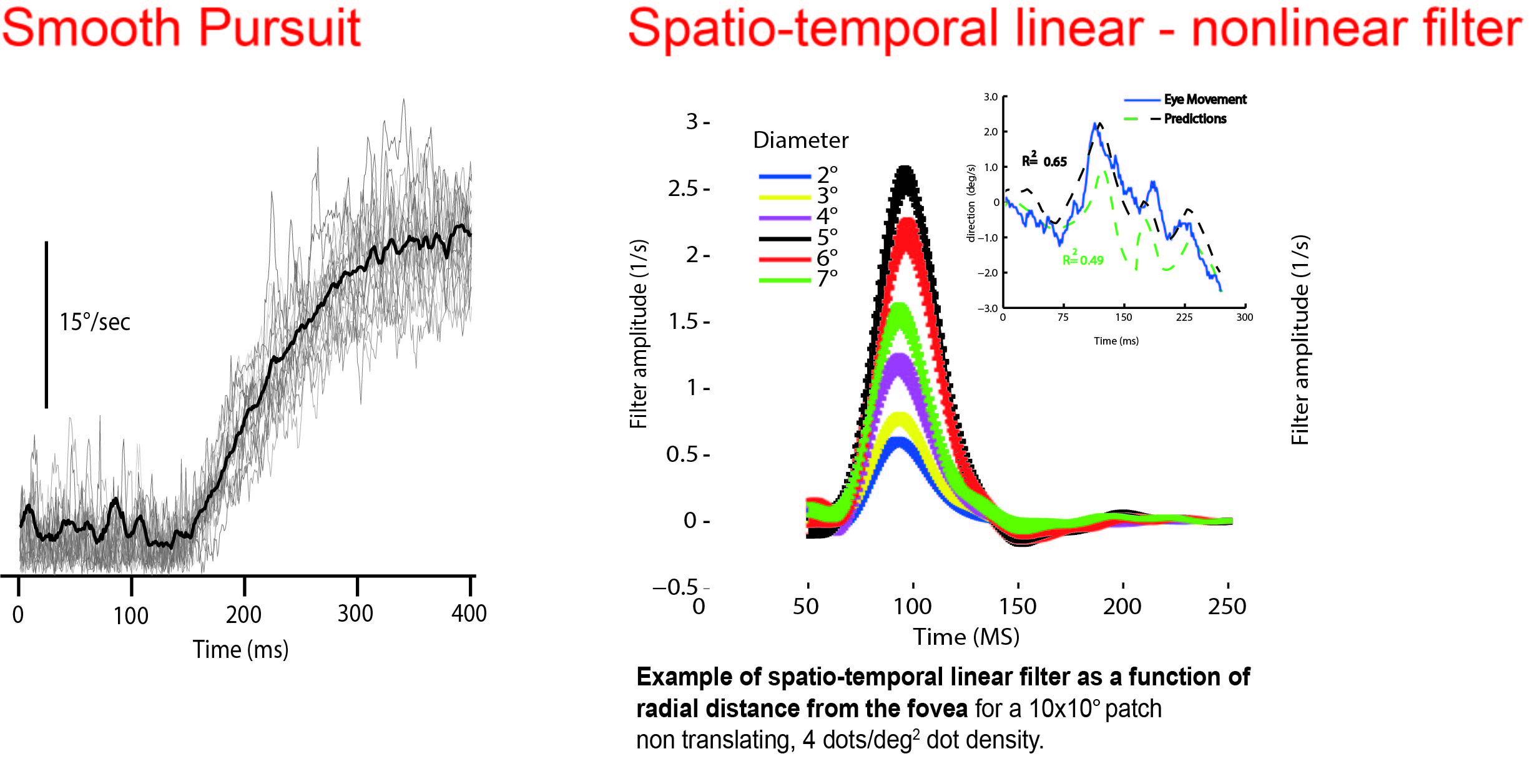

5. Spatial integration of visual motion signals for smooth pursuit eye movements
